MIT researchers have developed a way to train machine learning models on synthetic images that outperforms established approaches trained on real photos.

The key to this result is StableRep, a system that uses text-to-image models, in particular Stable Diffusion, to generate synthetic images and then learns from them with a method called “multi-positive contrastive learning.”

All About StableRep

Lijie Fan, a doctoral candidate in electrical engineering at MIT and the project’s lead researcher, explained that rather than simply feeding the model data, StableRep teaches it to grasp high-level concepts through context and variance.

“When multiple images, all generated from the same text, [are] all treated as depictions of the same underlying thing, the model dives deeper into the concepts behind the images, say the object, not just their pixels,” Fan said in a press statement.

In practice, the system treats multiple images generated from the same text prompt as positive pairs and trains the model to give them similar representations, while images from different prompts are pushed apart. This steers the model toward conceptual understanding rather than pixel-level detail.
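To make the multi-positive idea concrete, here is a minimal sketch in plain Python; it is not the researchers' code. It assumes each image has already been turned into an embedding vector and tagged with a hypothetical `caption_ids` entry identifying the prompt it came from; the function names and the `temperature` default of 0.1 are illustrative assumptions. For each image, the loss compares the softmax over its similarities to every other image in the batch with a target spread evenly across the other images generated from the same prompt.

```python
import math
from typing import List, Sequence


def _normalise(v: Sequence[float]) -> List[float]:
    # Scale a vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def multi_positive_contrastive_loss(
    embeddings: Sequence[Sequence[float]],
    caption_ids: Sequence[int],
    temperature: float = 0.1,  # illustrative value, not taken from the paper
) -> float:
    """Hypothetical sketch of a multi-positive contrastive loss.

    Images that share a caption id (i.e. were generated from the same prompt)
    are treated as positives for one another.
    """
    z = [_normalise(v) for v in embeddings]
    n = len(z)
    total = 0.0
    for i in range(n):
        # Candidates are all *other* images in the batch; the anchor itself is excluded.
        others = [j for j in range(n) if j != i]
        logits = {j: sum(a * b for a, b in zip(z[i], z[j])) / temperature for j in others}
        # Log of the softmax normaliser, shifted by the max for numerical stability.
        m = max(logits.values())
        log_norm = m + math.log(sum(math.exp(v - m) for v in logits.values()))
        # Positives: other images generated from the same prompt.
        positives = [j for j in others if caption_ids[j] == caption_ids[i]]
        if not positives:
            raise ValueError(f"image {i} has no same-prompt partner in the batch")
        # With a uniform target over the positives, the cross-entropy is the
        # average negative log-probability the model assigns to them.
        total += -sum(logits[j] - log_norm for j in positives) / len(positives)
    return total / n


# Usage: two prompts, two generated images each (toy 2-D embeddings).
emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
print(multi_positive_contrastive_loss(emb, [0, 0, 1, 1]))  # low: same-prompt images are close
print(multi_positive_contrastive_loss(emb, [0, 1, 0, 1]))  # high: "positives" are far apart
```

If every embedding were identical, each image would see three equally likely candidates with one positive among them, giving a loss of log 3; training lowers the loss by making same-prompt images more similar to each other than to images from other prompts.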

The technique outperforms leading models trained on real images, such as SimCLR and CLIP. StableRep also addresses long-standing problems with data collection in machine learning and marks a step toward more efficient ways of training AI.

Because it can generate high-quality synthetic images on demand, the approach could cut the heavy costs and resources associated with traditional data collection.

According to the MIT team, data collection in machine learning has run into substantial problems as it has grown. Whether it meant manually capturing photographs in the 1990s or scouring the internet for data in the 2000s, the process has always been labor-intensive and error-prone.

Raw data, meaning data that has not been vetted or otherwise prepared for use, frequently contains biases and fails to reflect real-world conditions, the researchers noted.


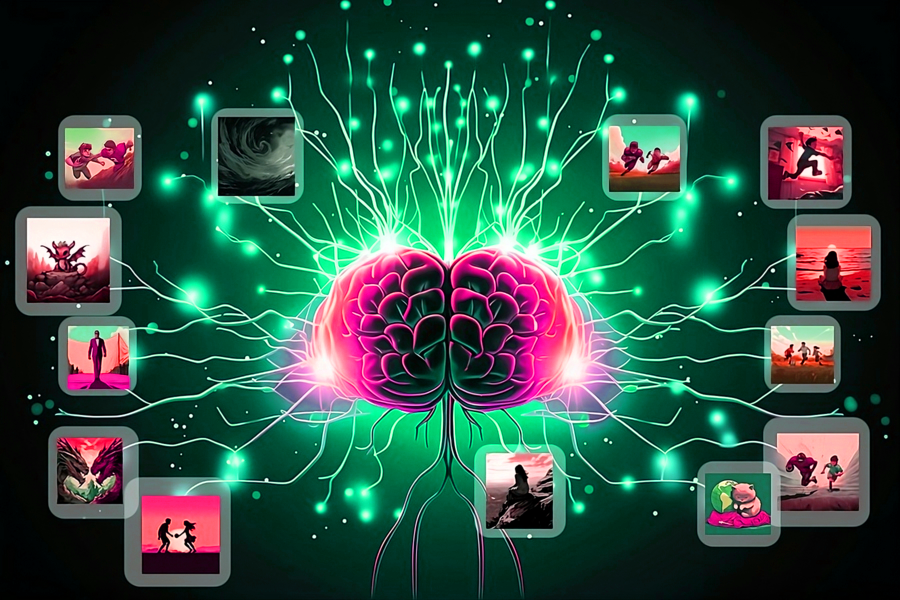

(MIT Researchers)

‘Guidance Scale’

StableRep’s success is partly due to careful tuning of the “guidance scale” in the generative model, which balances the diversity of the synthetic images against their quality. The method’s results challenge the assumption that enormous real-image datasets are required to train machine learning models.
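For readers unfamiliar with the term: in Stable Diffusion, the guidance scale is the weight used in classifier-free guidance, which blends the model's noise prediction made with the text prompt and one made without it at each denoising step. The sketch below shows that blending rule on plain lists of numbers; the function and argument names are hypothetical, and real pipelines apply the same arithmetic to tensors. Higher values make images follow the prompt more closely, typically at the expense of diversity, which is the trade-off the researchers tuned.

```python
from typing import List, Sequence


def apply_guidance(
    eps_uncond: Sequence[float],
    eps_cond: Sequence[float],
    guidance_scale: float,
) -> List[float]:
    """Classifier-free guidance: move from the unconditional prediction toward
    (and, for scales above 1, beyond) the text-conditioned prediction."""
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Usage with toy values: a scale of 1 returns the conditional prediction,
# while larger scales extrapolate further in the prompt's direction.
uncond, cond = [0.0, 0.5], [1.0, 0.5]
print(apply_guidance(uncond, cond, 1.0))  # [1.0, 0.5]
print(apply_guidance(uncond, cond, 3.0))  # [3.0, 0.5]
```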

An enhanced version, StableRep+, adds language supervision to training, further improving both accuracy and efficiency. The researchers acknowledge limitations, however, including slow image generation, potential bias in the text prompts, and difficulties with image attribution.
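One common way to add language supervision to image training is a CLIP-style image-text contrastive loss, in which each image must pick out its own caption from the batch and each caption must pick out its own image. The sketch below illustrates that general idea; it is an assumption about the form such a term can take, not StableRep+'s published objective, and the function names and `temperature` default are hypothetical. In a combined setup, a term like this could be added to an image-only contrastive loss.

```python
import math
from typing import List, Sequence


def _normalise(v: Sequence[float]) -> List[float]:
    # Unit-length vectors make dot products cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _log_softmax(row: Sequence[float]) -> List[float]:
    # Numerically stable log-softmax over one row of similarity scores.
    m = max(row)
    log_norm = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_norm for x in row]


def image_text_contrastive_loss(
    image_emb: Sequence[Sequence[float]],
    text_emb: Sequence[Sequence[float]],
    temperature: float = 0.1,  # illustrative value
) -> float:
    """Hypothetical CLIP-style loss; image_emb[i] and text_emb[i] are a matching pair."""
    imgs = [_normalise(v) for v in image_emb]
    txts = [_normalise(v) for v in text_emb]
    n = len(imgs)
    # sims[i][j]: scaled similarity between image i and caption j.
    sims = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image-to-text: each image should assign high probability to its own caption.
    i2t = -sum(_log_softmax(sims[i])[i] for i in range(n)) / n
    # Text-to-image: each caption should assign high probability to its own image.
    t2i = -sum(_log_softmax([sims[i][j] for i in range(n)])[j] for j in range(n)) / n
    return (i2t + t2i) / 2


# Usage: matched pairs that point in the same direction give a small loss.
print(image_text_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```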

Although StableRep makes considerable progress by reducing reliance on vast real-image collections, concerns remain about hidden biases in uncurated data and the importance of careful text selection.

The researchers describe the system as a step forward in cost-effective training alternatives for visual learning, while stressing the need for continued improvements in data quality and synthesis.

“Our work signifies a step forward in visual learning, towards the goal of offering cost-effective training alternatives while highlighting the need for ongoing improvements in data quality and synthesis,” Fan said.
